Algorithms for Seeing

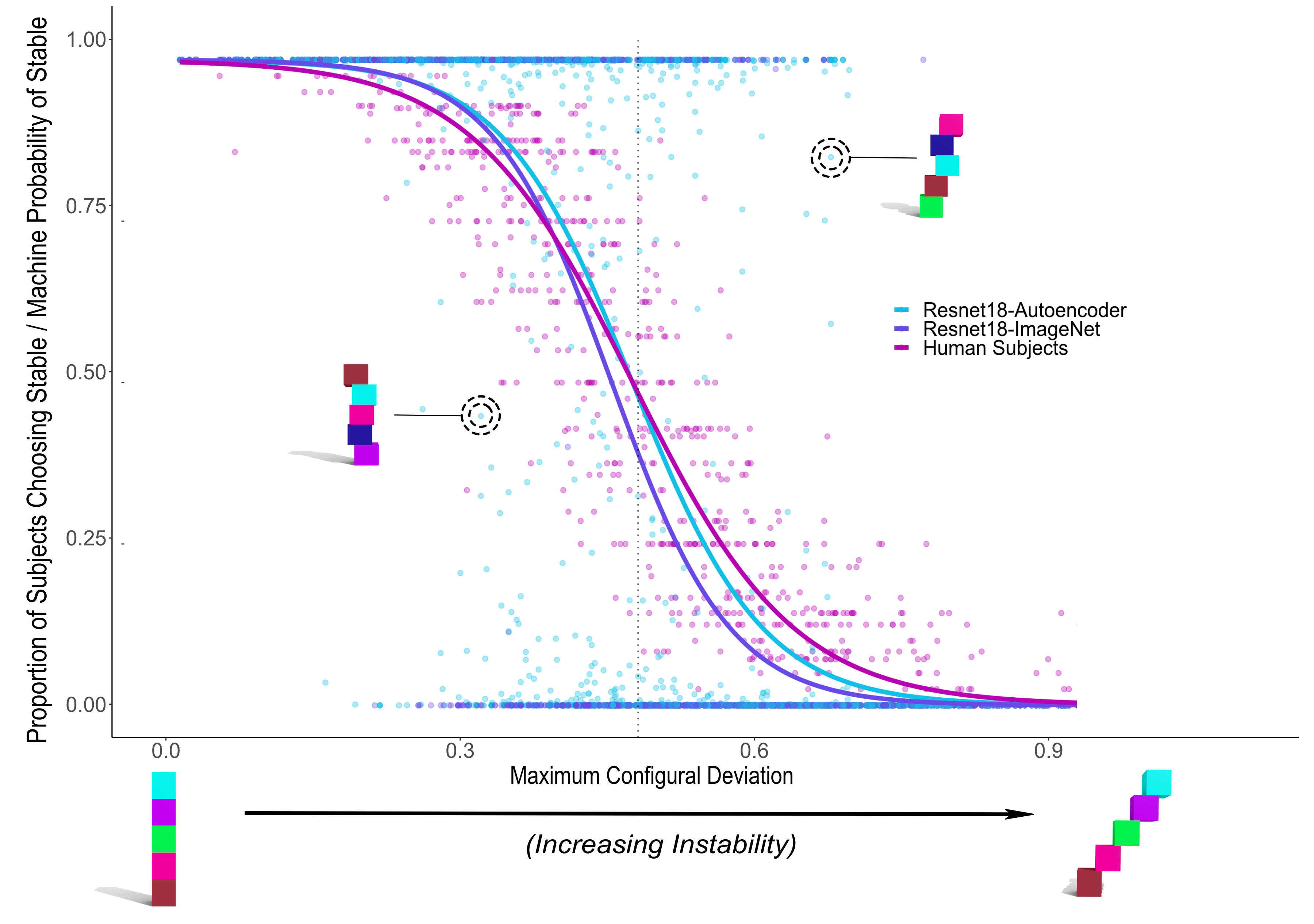

The overarching goal of the Vision Sciences Lab is to understand how the mind and brain construct perceptual representations, how the format of those representations impacts visual cognition (e.g., recognition, comparison, search, tracking, attention, memory), and how perceptual representations interface with higher-level cognition (e.g., judgment, decision-making and reasoning).

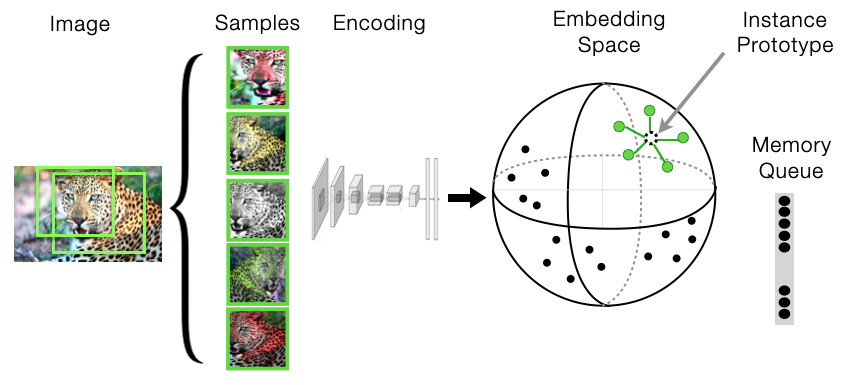

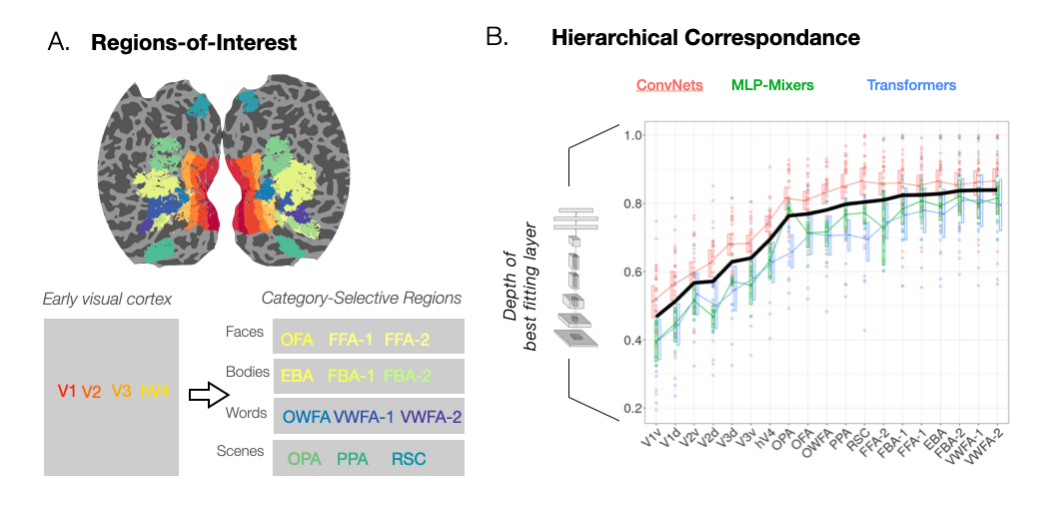

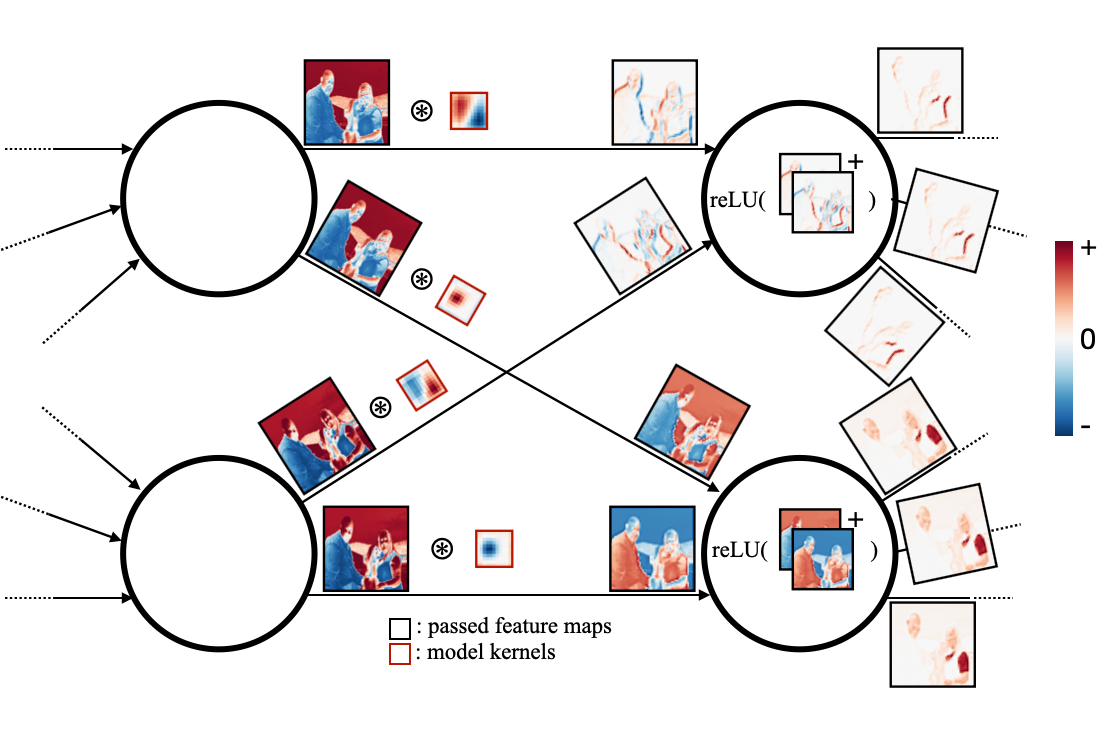

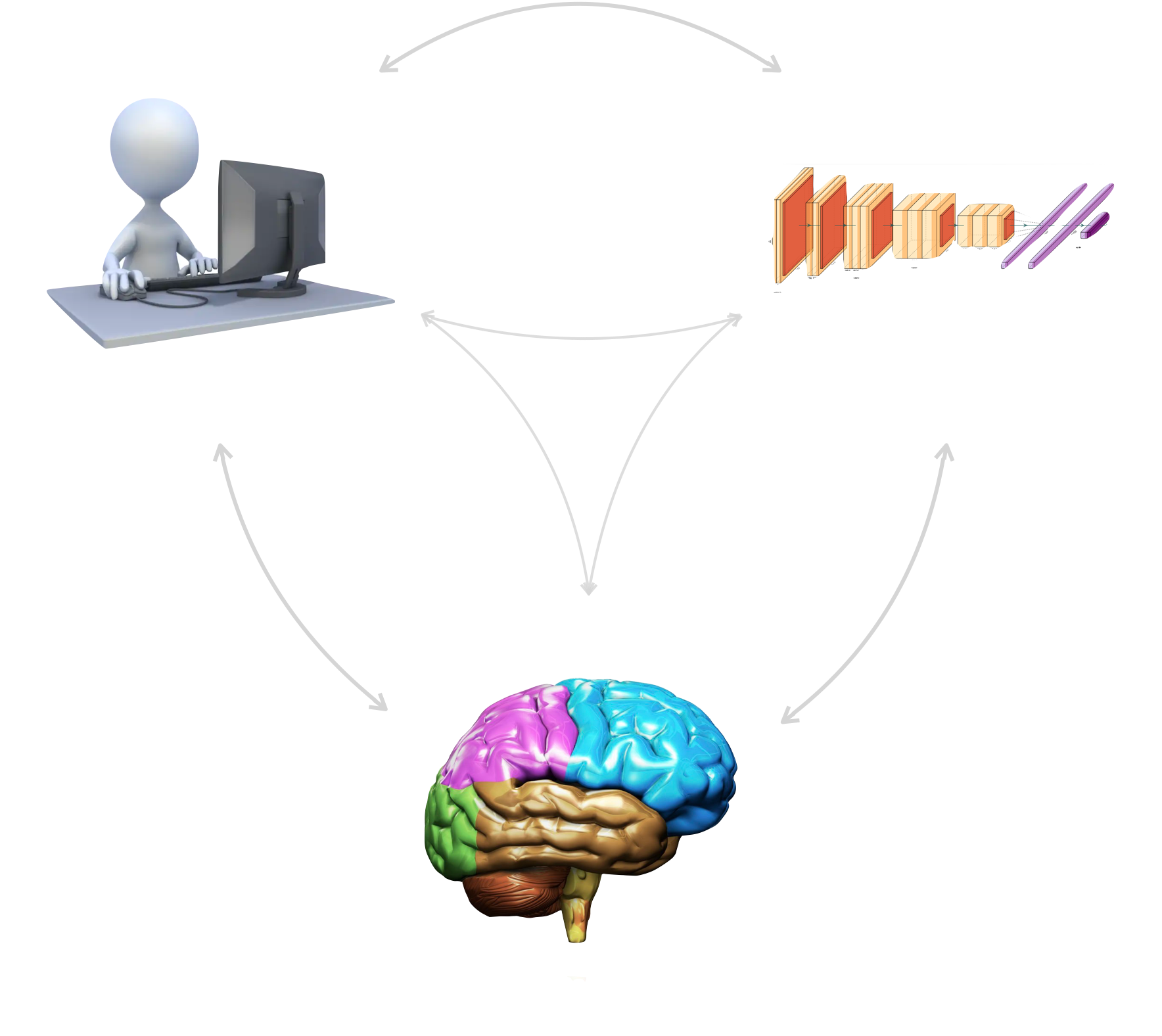

To this end, ongoing projects in the lab leverage advances in deep learning and computer vision, aiming to understand how humans and machines encode visual information at an algorithmic level, and how different formats of representation impact visual perception and cognition. We import algorithmic and technical insights from machine vision to build models of human vision, and we apply theories of human vision and the "experimental scalpel" of human vision science to probe the inner workings of deep neural networks and build more robust and human-like machine vision systems. Ultimately, we hope to contribute to the virtuous cycle between the fields of human vision science, cognitive neuroscience, and machine vision.

My early research focused on characterizing and understanding limits on our ability to attend to, keep track of, and remember visual information — our visual cognitive capacities. In many cases, deeper understanding of these limits seemed to demand a deeper understanding of visual representation formats, but these ideas were not easily testable, because our field had not yet developed scalable, performant models of visual encoding beyond relatively early visual processing stages.

However, since 2012, we have seen a veritable explosion in the availability of highly performant vision models from the fields of deep learning, machine vision, and artificial intelligence (or is that one field?). On a quarterly basis, new models with new algorithms and new abilities are released, each presenting intriguing hypotheses for the nature of visual representation in humans, and opportunities for a deeper understanding of visual cognition in both humans and machines.

Thus, ongoing work in the lab is focused primarily on this intersection between human and machine vision. See the page for details about this work.